Holo2

At H, we provide the most performant and cost-efficient agents for enterprise workflows. Many of these workflows require interacting with a variety of enterprise systems and websites through the UI. That is why we are building computer-use agents that interact with computers just like humans do: by looking at their screens, clicking, typing, and navigating interfaces.

Two capabilities are essential for these computer-use agents: navigation (deciding which action to take next) and user interface (UI) element localization (determining the precise coordinates of the element to click). Over the last few months, we have made major advances on both fronts, in our agentic framework as well as in our vision-language models (VLMs).
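To make localization concrete, the minimal sketch below converts a predicted click point into screen pixels. It assumes, for illustration only, that the model returns coordinates on a normalized 0 to 1000 grid. This convention and the helper name `to_screen_pixels` are hypothetical and do not describe a documented Holo2 output format.

```python
from typing import Tuple


def to_screen_pixels(x_norm: float, y_norm: float, width: int, height: int,
                     scale: int = 1000) -> Tuple[int, int]:
    """Map a point on a hypothetical [0, scale] grid to integer pixel coordinates.

    Assumption: the model emits (x, y) normalized to [0, scale]. This is an
    illustrative convention, not a documented Holo2 output format.
    """
    if not (0 <= x_norm <= scale and 0 <= y_norm <= scale):
        raise ValueError("predicted point lies outside the normalized grid")
    # Rescale to the screenshot size, then clamp so the click stays on screen.
    x = min(int(round(x_norm / scale * width)), width - 1)
    y = min(int(round(y_norm / scale * height)), height - 1)
    return x, y


print(to_screen_pixels(500, 250, 1920, 1080))  # (960, 270)
```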

On June 25, we released Holo1, our first series of agentic computer-use models, focused on the web. Holo1 models provided the most cost-effective agent for each performance target in web navigation.

Holo1.5, released on September 25, delivers state-of-the-art UI localization performance across multiple platforms. When used as the localizer in our Surfer 2 agent and paired with external proprietary navigator models, Holo1.5 reaches top performance on all four major computer-use benchmarks (see agent release), albeit at a high cost.
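The split between a navigator (which decides the next action) and a localizer (which turns a described target into coordinates) can be sketched as a ReAct-style loop. This is a minimal sketch, not Surfer 2's implementation: the `Step` schema, the `Environment` protocol, and `run_agent` are hypothetical, and the toy navigator, localizer, and environment at the bottom merely stand in for real models and a real browser or device.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Protocol, Tuple

Point = Tuple[int, int]


@dataclass
class Step:
    """One navigator decision (hypothetical schema for illustration)."""
    thought: str                  # the "reason" part of ReAct
    action: str                   # "click", "type", or "done"
    target: Optional[str] = None  # natural-language description of the UI element
    text: Optional[str] = None    # text to type, if any


History = List[Tuple[Step, Optional[Point]]]
Navigator = Callable[[str, bytes, History], Step]  # task, screenshot, history -> next step
Localizer = Callable[[bytes, str], Point]          # screenshot, target -> pixel coordinates


class Environment(Protocol):
    def screenshot(self) -> bytes: ...
    def execute(self, action: str, point: Optional[Point], text: Optional[str]) -> None: ...


def run_agent(task: str, navigator: Navigator, localizer: Localizer,
              env: Environment, max_steps: int = 10) -> History:
    """Observe, let the navigator reason and pick an action, ground it, then act."""
    history: History = []
    for _ in range(max_steps):
        screenshot = env.screenshot()
        step = navigator(task, screenshot, history)
        if step.action == "done":
            break
        # Only actions aimed at a UI element need precise coordinates.
        point = localizer(screenshot, step.target) if step.target else None
        env.execute(step.action, point, step.text)
        history.append((step, point))
    return history


# Toy stand-ins for real models and a real browser or device (hypothetical).
class FakeEnv:
    def __init__(self) -> None:
        self.log: List[Tuple[str, Optional[Point], Optional[str]]] = []

    def screenshot(self) -> bytes:
        return b"<png bytes>"

    def execute(self, action: str, point: Optional[Point], text: Optional[str]) -> None:
        self.log.append((action, point, text))


script = [Step("Focus the search box", "click", "search box"),
          Step("Enter the query", "type", "search box", "Holo2"),
          Step("Task complete", "done")]
env = FakeEnv()
history = run_agent("search for Holo2", lambda task, shot, hist: script[len(hist)],
                    lambda shot, target: (640, 48), env)
print(env.log)  # [('click', (640, 48), None), ('type', (640, 48), 'Holo2')]
```

Keeping the localizer behind its own interface is what allows a proprietary navigator to be paired with a Holo localizer, or a single model that both navigates and localizes to fill both roles.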

Today, we close the gap between agent performance and cost efficiency by releasing Holo2, a series of powerful, lightweight models that can both localize and navigate across web, mobile, and desktop platforms, at a fraction of the original Surfer 2 cost.

Holo2

Available in 4B, 8B, and 30B-A3B sizes, Holo2 lets users choose the trade-off between performance and efficiency that fits their needs. Holo2 models are based on the Qwen3-VL series and can serve as drop-in replacements for their predecessors, Holo1 and Holo1.5. Holo2 was trained on curated localization and agent datasets to retain the strong UI grounding of Holo1.5 while boosting navigation capabilities. Find them on Hugging Face, and use them with our cookbook.
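Because Holo2 is based on Qwen3-VL, it can be served behind an OpenAI-compatible endpoint such as the one vLLM exposes (for example, after `vllm serve`). The sketch below only builds, and can optionally send, a chat-completions request containing a screenshot. The repository id `Hcompany/Holo2-8B`, the instruction text, and the local server URL are assumptions to check against the model card and cookbook; the exact prompt format Holo2 expects is not shown here.

```python
import base64
import json
import urllib.request

# Assumption: illustrative Hugging Face repo id; check the official model card.
MODEL_ID = "Hcompany/Holo2-8B"


def build_request(screenshot_png: bytes, instruction: str, model: str = MODEL_ID) -> dict:
    """Build an OpenAI-compatible chat-completions payload with one screenshot."""
    image_b64 = base64.b64encode(screenshot_png).decode("ascii")
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                # The screenshot travels inline as a base64 data URL.
                {"type": "image_url",
                 "image_url": {"url": f"data:image/png;base64,{image_b64}"}},
                {"type": "text", "text": instruction},
            ],
        }],
        "temperature": 0.0,
    }


def send(payload: dict, base_url: str = "http://localhost:8000/v1") -> dict:
    """POST the payload to a running server (assumed local URL; not called below)."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


payload = build_request(b"\x89PNG fake bytes", "Click the 'Sign in' button.")
print(payload["model"], len(payload["messages"][0]["content"]))
```

Since the request shape does not depend on the checkpoint, swapping one model for another only changes the `model` string, which keeps a drop-in replacement cheap at the integration level.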

Multi-environment – Holo2 is our first series of models that thrives not only on the web but also on desktop and mobile computer-use platforms such as Ubuntu and Android.

Mixture-of-experts – Our flagship Holo2-30B-A3B uses a Mixture-of-Experts (MoE) architecture: of its roughly 30B parameters, only about 3B are active for each token, which makes inference more efficient while delivering performance comparable to a full-size model.

Holo2 thinks – Following the thinking paradigm, Holo2 generates its own reasoning tokens before answering, which guide it toward more accurate and context-aware responses.

Built for agents – Holo2 was developed with our Surfer 2 agent architecture in mind (blog post, tech report), and as such can be integrated into any ReAct agentic pipeline.

Seamless deployment – The Holo2 models can be easily deployed in any inference framework supporting Qwen3-VL models, such as vLLM.

Permissive licenses – Both Holo2-4B and Holo2-8B are released under the Apache 2.0 license. Holo2-30B-A3B is available under a non-commercial license; please reach out for commercial use.
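The efficiency argument behind the Mixture-of-Experts design can be illustrated with a toy top-k router: a gating network scores every expert, but only the k highest-scoring experts are evaluated, and their outputs are mixed with renormalized gate weights. This is a generic sketch with 8 toy experts and k = 2, not the actual routing configuration of Holo2 or Qwen3-VL; in a 30B-A3B model the same principle means only about 3B parameters are active per token.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: List[float], router_logits: Sequence[float],
                experts: Sequence[Expert], k: int = 2) -> Tuple[List[float], List[int]]:
    """Top-k routing: run only the k best-scoring experts and mix their outputs."""
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    gates = softmax([router_logits[i] for i in top])  # renormalize over the chosen k
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = experts[i](x)  # unselected experts are never evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top


calls: List[int] = []


def make_expert(i: int) -> Expert:
    """Toy expert that scales its input by (i + 1) and records that it ran."""
    def expert(x: List[float]) -> List[float]:
        calls.append(i)
        return [(i + 1) * v for v in x]
    return expert


experts = [make_expert(i) for i in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.3, 0.2]
out, chosen = moe_forward([1.0, 2.0], logits, experts, k=2)
print(chosen, calls)  # [1, 4] [1, 4]
```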

Surfing with Holo2

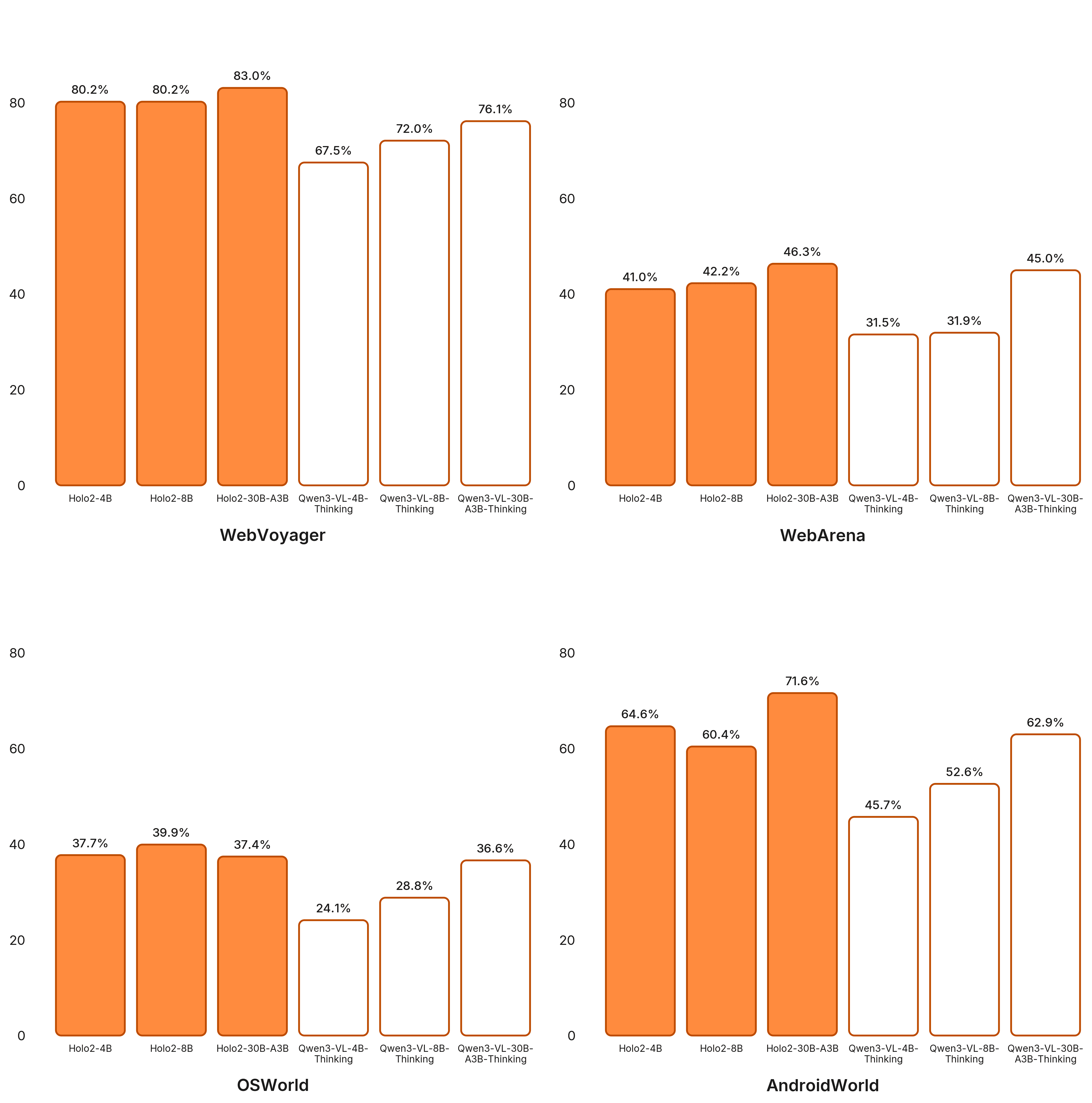

Powered by Holo2, Surfer 2 outperforms its predecessors as well as open-source baselines across all four major computer-use benchmarks: WebVoyager, WebArena, OSWorld, and AndroidWorld.

State-of-the-art UI Localization

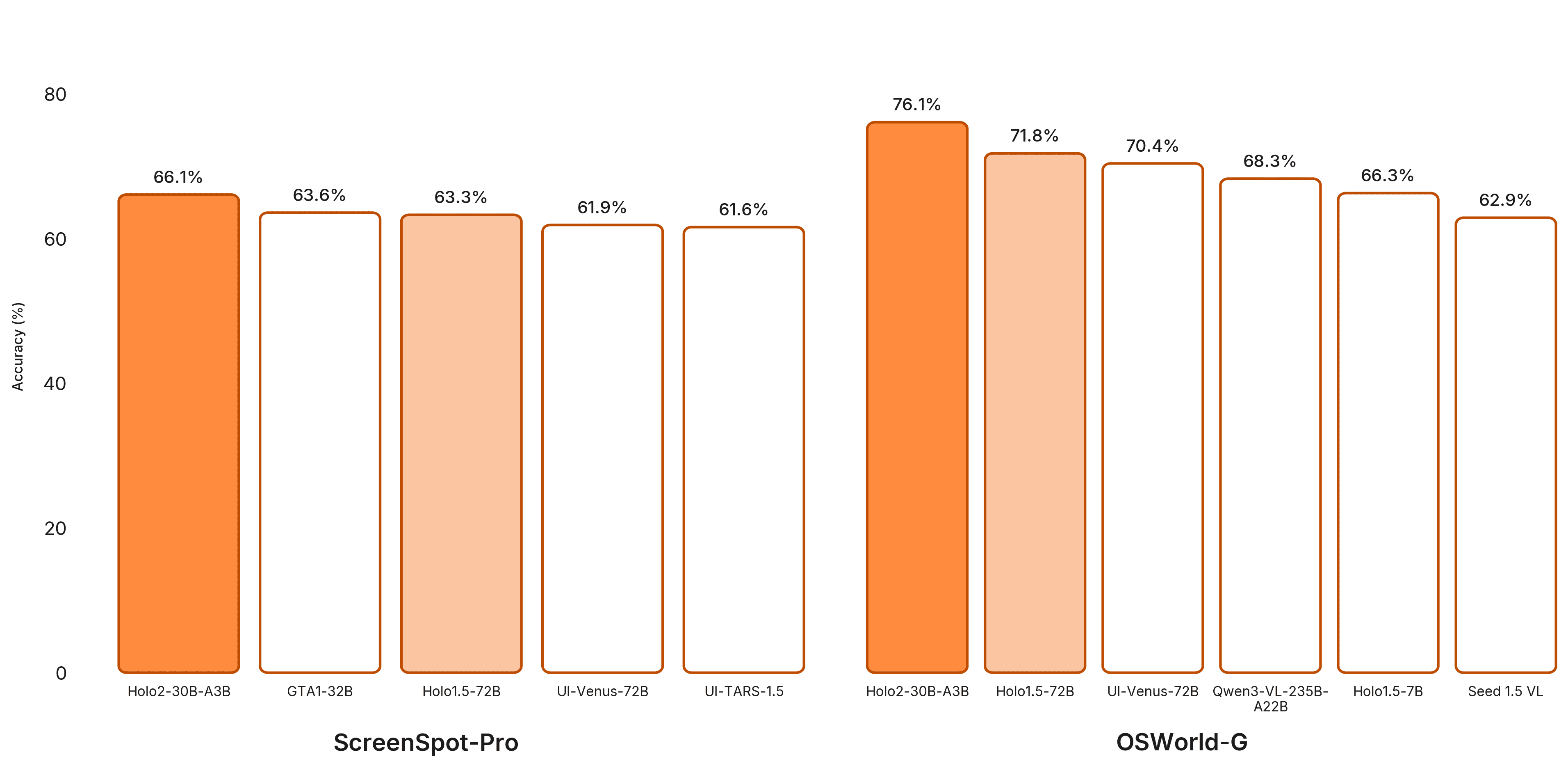

Holo2-30B-A3B sets new records among publicly released UI localization models. In particular, it achieves 66.1% accuracy on ScreenSpot-Pro and 76.1% on OSWorld-G, thanks to GRPO (Group Relative Policy Optimization) training tailored for localization.
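Localization benchmarks such as ScreenSpot-Pro count a prediction as correct when the predicted click point falls inside the target element's bounding box, and that hit-or-miss signal is a natural reward for GRPO. The sketch below shows such a reward, the accuracy metric it induces, and GRPO's group-relative advantage (each sampled answer's reward minus the group mean, divided by the group standard deviation). The binary reward and the helper names are assumptions for illustration, not H's actual training recipe.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Point = Tuple[float, float]


def click_reward(point: Point, box: Box) -> float:
    """Assumed binary reward: 1.0 if the click lands inside the target box."""
    x, y = point
    x0, y0, x1, y1 = box
    return 1.0 if x0 <= x <= x1 and y0 <= y <= y1 else 0.0


def accuracy(points: Sequence[Point], boxes: Sequence[Box]) -> float:
    """Benchmark-style accuracy: fraction of predicted clicks that hit their target."""
    return mean(click_reward(p, b) for p, b in zip(points, boxes))


def group_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages: normalize each reward within its sampling group."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled clicks for the same instruction and target element.
target: Box = (100, 40, 180, 70)
samples: List[Point] = [(120, 55), (300, 55), (179, 41), (90, 60)]
rewards = [click_reward(p, target) for p in samples]
print(rewards)                    # [1.0, 0.0, 1.0, 0.0]
print(group_advantages(rewards))  # hits get positive, misses negative advantages
```

Because advantages are computed relative to other samples for the same instruction, no learned value model is needed, and groups where every sample hits (or every sample misses) yield zero advantage.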

At every size, Holo2 outperforms leading competitors on established localization benchmarks.

The road ahead

We will keep leveraging and refining our training pipeline to continuously improve Holo and to empower our agents to navigate the increasingly complex business environments shaped by our customers’ needs, all while pushing the cost/performance frontier of our agents.

This is just the beginning. Stay tuned.